What is Hadoop?

Apache Hadoop is an open-source framework for storing and processing structured and unstructured data in a distributed environment. Its two best-known sub-projects are HDFS and Hadoop MapReduce. Other projects in the Hadoop ecosystem include Hive, HBase, Sqoop, ZooKeeper, Flume, and Mahout. Hadoop is widely used in financial services, insurance, banking, retail, telecommunications, healthcare, life sciences, media, and entertainment. Hadoop-based services span analytics, application software, SQL layers, machine learning, and performance monitoring software.

1. Evolution of Hadoop

Hadoop has a long history as a distributed computing system, and that history is closely tied to the Google search engine. Nutch and Hadoop are open-source projects for web crawling and distributed computing, and both were influenced by systems that Google built for its search engine. Hadoop was created in 2005 and was developed alongside the Nutch search engine project.

The distributed computing part of Nutch became Hadoop, while Nutch itself remained the open-source web crawling project. Hadoop went on to energize the analytics market, with key players such as Microsoft, IBM Corporation, AWS, Cloudera Inc., and Teradata Corporation. Companies draw structured, semi-structured, and unstructured data from their data lakes to support decision making. Cloud platforms lower the cost of storing this data, and multiple vendors offer Hadoop distributions for big data.

As big data grows, Hadoop systems have to address data security, data governance, and data deployment. In 2002, the Apache Nutch project set out to index one billion pages. In 2003, Google described the Google File System (GFS), which solved the problem of storing the very large files produced by web crawling and indexing. Open source is a model in which technology is developed by a community.

Open-source implementations of the ideas behind GFS and MapReduce were therefore built within the Apache Nutch project. Hadoop launched with HDFS and MapReduce as its main projects and incorporated further sub-projects later.

2. Architecture of Hadoop

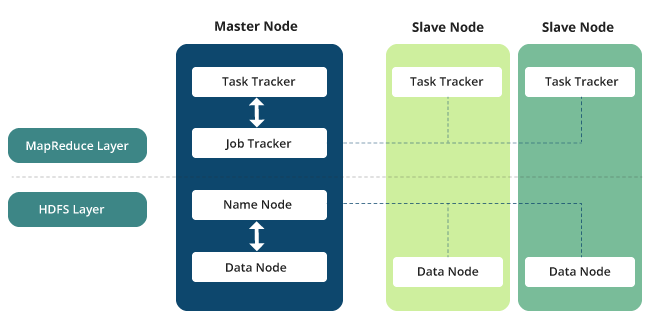

Hadoop uses a master-slave architecture in which HDFS handles distributed data storage and MapReduce handles distributed data processing. Hadoop clusters suit big data analysis because they deal not only with the data itself but also with the processing logic applied to it. An efficient Hadoop deployment requires good design in terms of computing power, storage, and networking. Let us discuss the architecture in terms of Hadoop's nodes, HDFS, and MapReduce.

2.1 Nodes of Hadoop

The architecture of Hadoop involves four node roles: the name node, the master node, the data node, and the slave node. The name node manages the files and directories in the namespace. The data node manages HDFS blocks and handles interaction with them. The master node coordinates the processing of data with MapReduce. Slave nodes are the additional machines that store the data and carry out the complex calculations.

A Hadoop cluster is organized into data centers, racks, and nodes. Different types of nodes handle different types of jobs. Racks sit within a data center, and nodes sit within a rack. This hierarchy forms a tree, and the distance between two nodes is measured along it. Because bandwidth is difficult to measure directly in a cluster that handles large volumes of data, Hadoop represents the network as a tree and uses the distance between nodes in its place.
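The tree-based distance described above can be sketched in a few lines of Python. This is only an illustration, not Hadoop's own code: the function name `network_distance` and the `/datacenter/rack/node` path format are assumptions made for the example. The distance is the number of steps from each node up to their closest common ancestor, added together.

```python
def network_distance(a: str, b: str) -> int:
    """Return the tree distance between two nodes.

    Nodes are given as hypothetical paths such as '/d1/r1/n1'
    (data center / rack / node). The distance is the number of hops
    from each node up to the closest common ancestor, summed.
    """
    path_a = a.strip("/").split("/")
    path_b = b.strip("/").split("/")

    # Count how many leading path components the two nodes share.
    common = 0
    for x, y in zip(path_a, path_b):
        if x != y:
            break
        common += 1

    return (len(path_a) - common) + (len(path_b) - common)


if __name__ == "__main__":
    print(network_distance("/d1/r1/n1", "/d1/r1/n1"))  # same node: 0
    print(network_distance("/d1/r1/n1", "/d1/r1/n2"))  # same rack: 2
    print(network_distance("/d1/r1/n1", "/d1/r2/n3"))  # same data center: 4
    print(network_distance("/d1/r1/n1", "/d2/r3/n4"))  # different data centers: 6
```

A smaller distance stands for more available bandwidth, so a scheduler following this idea would prefer the same node, then the same rack, then the same data center.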

2.2 HDFS Cluster

Available bandwidth decreases step by step across the following cases: processes on the same node, different nodes on the same rack, nodes on different racks in the same data center, and nodes in different data centers. An HDFS cluster consists of the name node, which holds the metadata, and the data nodes, which hold the actual data. The name node keeps the metadata, while the locations of blocks on the data nodes are rebuilt every time the system starts.

These locations are not persisted; they are reconstructed. A data node holds the actual data and acts as a slave to the master. It serves read and write requests from clients. Java classes are used to interact with Hadoop's file system, and Hadoop's file API accepts generic requests, which is useful for these interactions.

The command-line interface is a simple way to interact with HDFS. Operations such as creating directories, deleting data, listing directories, moving files, and reading files can all be done from the command line.
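The idea that locations are reconstructed rather than persisted can be illustrated with a small in-memory model. This is a simplified sketch, not the HDFS implementation: the class `NameNode`, its method names, and the block and node identifiers are all hypothetical. It assumes that data nodes report which blocks they hold and that the name node rebuilds its location map from those reports.

```python
from collections import defaultdict


class NameNode:
    """Toy model of a name node (hypothetical, for illustration only)."""

    def __init__(self):
        # Namespace metadata: file path -> ordered list of block ids.
        self.file_blocks = {}
        # Block locations: kept only in memory, rebuilt from reports.
        self.block_locations = defaultdict(set)

    def add_file(self, path, block_ids):
        self.file_blocks[path] = list(block_ids)

    def receive_block_report(self, datanode, block_ids):
        # A data node tells the name node which blocks it stores.
        for block_id in block_ids:
            self.block_locations[block_id].add(datanode)

    def restart(self):
        # Locations are not persisted, so a restart forgets them.
        self.block_locations.clear()

    def locate(self, path):
        # Return (block id, sorted list of data nodes) for each block.
        return [
            (block_id, sorted(self.block_locations.get(block_id, set())))
            for block_id in self.file_blocks[path]
        ]
```

After `restart()`, `locate()` returns empty location lists until the data nodes report again, which mirrors the behavior described in the text.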

2.3 MapReduce

MapReduce handles data processing in Hadoop, and jobs can be written in languages such as Java, Python, C++, and Ruby. It has two phases: the map phase and the reduce phase. The map phase takes care of splitting and mapping. The reduce phase takes care of shuffling and reducing. A job tracker and multiple task trackers manage the execution. The job tracker coordinates the job and runs its tasks on different data nodes. Each task tracker runs the tasks assigned to it and sends progress reports to the job tracker.
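The flow of splitting, mapping, shuffling, and reducing can be sketched as a word count that runs in a single Python process. This is a minimal illustration under stated assumptions, not the Hadoop API: the function names are hypothetical, and each input string stands in for one input split.

```python
from collections import defaultdict


def map_phase(split):
    """Map: emit a (word, 1) pair for every word in one input split."""
    for word in split.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all values by key and sort the keys."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key, values):
    """Reduce: combine the list of values for one key into a total."""
    return key, sum(values)


def word_count(splits):
    """Run map, shuffle, and reduce over a list of input splits."""
    mapped = [pair for split in splits for pair in map_phase(split)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "big cluster"]))
    # {'big': 2, 'cluster': 1, 'data': 1}
```

In a real cluster the map calls would run in parallel on different nodes and the shuffle would move data across the network; here everything happens in one list for clarity.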

If there is a failure, the job tracker reschedules the task on a task tracker. Hadoop supports two types of join operation: the map-side join and the reduce-side join. A map-side join joins two tables in the map phase without using the reduce phase. It executes very quickly because the table to be joined is loaded into memory. A reduce-side join performs the join in the reduce phase. Here the reducer receives each key with its list of values from the mappers after sorting and shuffling. The reducers then combine the values for each key to produce the final output.
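The difference between the two joins can be sketched in plain Python. This is an illustration rather than Hadoop code: the function names and the sample tables are hypothetical, each table is a list of `(key, value)` pairs, and the map-side version assumes the smaller table fits in memory and has unique keys.

```python
from collections import defaultdict


def map_side_join(small, large):
    """Join while streaming the large table; no reduce step is needed."""
    lookup = dict(small)  # the small table is held in memory
    return [(key, lookup[key], value) for key, value in large if key in lookup]


def reduce_side_join(left, right):
    """Group both tables by key first, then join inside the 'reducer'."""
    groups = defaultdict(lambda: {"left": [], "right": []})
    for key, value in left:
        groups[key]["left"].append(value)
    for key, value in right:
        groups[key]["right"].append(value)

    joined = []
    for key in sorted(groups):  # reducers see keys in sorted order
        for left_value in groups[key]["left"]:
            for right_value in groups[key]["right"]:
                joined.append((key, left_value, right_value))
    return joined


if __name__ == "__main__":
    users = [(1, "ann"), (2, "bob")]
    orders = [(1, "book"), (2, "pen"), (1, "lamp"), (3, "cup")]
    print(map_side_join(users, orders))
    # [(1, 'ann', 'book'), (2, 'bob', 'pen'), (1, 'ann', 'lamp')]
    print(reduce_side_join(users, orders))
    # [(1, 'ann', 'book'), (1, 'ann', 'lamp'), (2, 'bob', 'pen')]
```

Both functions produce the same joined rows. The map-side version avoids the grouping step, which is why it is faster when one table is small enough to load into memory.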

Hadoop has two types of counters for collecting information about a job: built-in counters and user-defined counters. The built-in counters include MapReduce task counters, filesystem counters, file input format counters, file output format counters, and job counters. User-defined counters serve similar purposes for application-specific measurements, and in Java they are declared with an ‘enum’.
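The idea of a user-defined counter declared as an enum can be mimicked in Python. This sketch does not use the Hadoop API: the enum `RecordQuality`, the function `count_records`, and the two-field record format are all assumptions made for the example.

```python
from collections import Counter
from enum import Enum


class RecordQuality(Enum):
    """Hypothetical user-defined counter names."""
    VALID = "valid"
    MALFORMED = "malformed"


def count_records(lines):
    """Count valid and malformed 'name,number' records, as a mapper might."""
    counters = Counter()
    for line in lines:
        fields = line.split(",")
        if len(fields) == 2 and fields[1].strip().isdigit():
            counters[RecordQuality.VALID] += 1
        else:
            counters[RecordQuality.MALFORMED] += 1
    return counters


if __name__ == "__main__":
    result = count_records(["a,1", "b,x", "c"])
    print(result[RecordQuality.VALID], result[RecordQuality.MALFORMED])  # 1 2
```

Using an enum keeps the counter names fixed in one place, so a typo in a counter name becomes an error instead of silently creating a new counter.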

3. Different releases of Hadoop

Hadoop was first released with MapReduce, HDFS, and Hadoop Common. Hadoop 2.0 introduced Apache Hadoop YARN, which handles resource management and job scheduling. YARN operates between HDFS and the Hadoop processing engines. It assigns cluster resources using the first-in-first-out method and other scheduling methods. Hadoop 3.0.0 added new features such as scaling YARN beyond the 10,000-node limit, GPU support, and erasure coding. Hadoop 3.1 and 3.2 introduced support for running Docker containers on YARN, and the newer releases are accompanied by two new components: Hadoop Submarine, which targets machine learning, and Hadoop Ozone, an object store that targets on-premises systems.

3.1 HDFS

HDFS provides distributed storage for the data that Hadoop processes. Hadoop's command interface interacts with HDFS. The name node and the data nodes make it possible to check the status of the cluster. HDFS also provides access to file system data, file permissions, and authentication.

3.2 YARN

YARN stands for ‘Yet Another Resource Negotiator’, and it was introduced with Hadoop 2.0. It separates the resource management layer from the processing layer. This allows graph processing, interactive processing, stream processing, and batch processing engines to work on the data stored in HDFS. Scalability, cluster utilization, multi-tenancy, and compatibility are the main features of YARN.

4. Use Cases of Hadoop

Many organizations adopt Hadoop because it drives analytics and fuels business analysis. Processing tools built around it help speed up Hadoop workloads. According to one report, 80 percent of Fortune 500 companies were expected to adopt Hadoop by 2020. Let us look at some of the use cases of Hadoop.

4.1 Financial Sector

Using the right architecture increases the efficiency of Hadoop. Hadoop is widely used in the financial, telecom, healthcare, and retail sectors. Hadoop is a complex technology that supports big data, so the use cases below illustrate its benefits and its exposure across industries.

JPMorgan, one of the largest organizations in financial services, uses the Hadoop framework to manage very large volumes of data and to process unstructured data. Insurance companies have used Hadoop to design policies suited to different groups of users. Royal Bank of Scotland used Hadoop to understand customer behavior, which improved its customer interactions. Beyond competing with rivals, understanding customer satisfaction is crucial, and the bank reportedly invested heavily in it.

4.2 Telecom Industry

In the case of Nokia, a Hadoop system from Cloudera manages 100 TB of structured data and more than 500 TB of semi-structured data. In the telecom industry, Hadoop systems take care of call records, telecom data maintenance, new product introduction, planning, and network traffic analytics.

4.3 Web Services Company

Yahoo manages 150 terabytes of machine data, which is a challenging task. Hunk, a Splunk analytics product that works with Hadoop, is used for data analytics. Yahoo runs Hadoop on about 40,000 servers with 100,000 CPUs, and its largest Hadoop cluster has 4,500 nodes. Yahoo uses Hadoop analytics to extend value-added packages to its customers.

4.4 Healthcare Sector

In the healthcare industry, Hadoop is used to help reduce costs, treat diseases, improve profits, enhance quality, and predict epidemics. Sensor data is used to manage tasks across the health sector.

4.5 Retail Sector

In the retail and e-commerce industries, Hadoop is used to forecast inventory, set dynamic product prices, manage the supply chain, run marketing, and detect fraud.

4.6 Hotel Industry

In the hotel industry, where NoSQL databases such as Apache Cassandra are used, conversion rates changed significantly after Hadoop was adopted. Real-world case studies like these show how Hadoop is used in practice, and commercially Hadoop has a footprint in multiple industries.

5. Features of Hadoop

5.1 Open-source

Because Hadoop is open source, its code is available to study and modify.

5.2 Distributed processing

Hadoop handles structured and unstructured data on clusters and processes the data in parallel across the nodes of the cluster.

5.3 Fault tolerance

Failures of nodes or tasks are handled through the replicas kept of each block. By default, if a node fails, the other nodes that hold replicas take over.
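The role of replicas in surviving a node failure can be shown with a short sketch. It is not how HDFS chooses nodes: the round-robin placement, the function names, and the node and block identifiers are assumptions made for illustration, while the replication factor of 3 matches the HDFS default.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    Assumes replication <= len(nodes). Real HDFS placement is
    rack-aware; round-robin is used here only to keep the sketch short.
    """
    placement = {}
    for index, block_id in enumerate(block_ids):
        placement[block_id] = [
            nodes[(index + offset) % len(nodes)] for offset in range(replication)
        ]
    return placement


def surviving_replicas(placement, failed):
    """Return the replicas of each block that are still on healthy nodes."""
    return {
        block_id: [node for node in replicas if node not in failed]
        for block_id, replicas in placement.items()
    }


if __name__ == "__main__":
    placement = place_replicas(["b0", "b1"], ["n1", "n2", "n3", "n4"])
    print(placement)
    # {'b0': ['n1', 'n2', 'n3'], 'b1': ['n2', 'n3', 'n4']}
    print(surviving_replicas(placement, failed={"n2"}))
    # {'b0': ['n1', 'n3'], 'b1': ['n3', 'n4']}
```

With three replicas per block, losing one node still leaves two copies of every block, so reads can continue from the remaining nodes.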

5.4 Availability

Hadoop keeps multiple replicas of the data and multiple network paths. If a piece of data or a network path fails, the system works around it, which leads to high availability.

5.5 Scalability

New hardware can be added to the nodes easily. New machines can also be added to the Hadoop cluster easily, which provides horizontal scalability.

5.6 Computing power

The more nodes a Hadoop cluster has, the more computing power it offers for the different types of data. Fast processing is in high demand for achieving optimized performance.

Many domains depend on their customers, and Hadoop is one of the top technologies used to manage the challenges that businesses face. Many industries, both horizontal and vertical, require Hadoop. Hortonworks and Cloudera merged in a deal valued at $5.2 billion. The insurance and finance industries account for 19 per cent of jobs. Overall demand for data scientists is almost 59 per cent. Hadoop is a technology that is going to dominate the future.